To catch a priest

How ad tech data was used to oust a senior Catholic cleric, and how most anyone can do the same

How often have I said to you that when you have eliminated the impossible, whatever remains, however improbable, must be the truth?

-Arthur Conan Doyle, The Sign of the Four

Let’s get the facts of the case out of the way first:

Per a post that originally appeared at The Pillar, a shadowy figure used mobile app data from 2018 through 2020 to show that Monsignor Jeffrey Burrill, the general secretary of the United States Conference of Catholic Bishops, was a regular user of the dating app Grindr and a frequenter of alleged gay bars.[1] Msgr. Burrill subsequently resigned his position as general secretary, and the original Pillar piece has been (re)reported in the usual dogpiled media fast-follow, but without much in the way of technical specifics.

Grindr claimed in a statement that it was not the source of the data, and that such a data breach would be “infeasible from a technical standpoint and incredibly unlikely to occur.” I agree it’s unlikely that the data leaked directly from the app or Msgr. Burrill’s device, but it’s not quite true that data Grindr generated could not have been used to reconstruct Burrill’s past behavior. Below, I’m going to engage in some informed speculation on how a lone crusader, armed with data and some hacking skills, could have zeroed in on one man’s behavior over years using commercially available information.

By way of self-introduction: I’ve spent 13 years turning data into money via digital advertising. I built a real-time exchange (like the one involved here) for Facebook, and I even built the bidding machine for the exact ad exchange in question while employed at a large ads buyer. I’ve also worked at Branch Metrics, one of the world’s biggest mobile attribution data companies and warehouses of third-party user data. Which is a long and self-glorifying way of saying I know this world very well, so I’m telling you how an ad tech insider (or just someone with technical skills and a willingness to read dev docs) could hunt someone down using advertising data. It’s hard, but not impossible. It’ll almost certainly happen again.

The challenge has three steps:

1. Getting the data
2. Finding the target
3. Constructing a behavioral profile based on geographic data

Getting the data

What sounds like the simplest part is actually the hardest.

From my own intuition and what Grindr has publicly stated, this wasn’t the result of an actual hack of the monsignor’s phone, or of some data breach on Grindr’s part. Such a breach would have to have been so vast that you’d see echoes of it elsewhere. The way the data got out was very simple and working as designed: via programmatic real-time ad-buying technology, a complex edifice that’s been built brick by kludgy brick over two decades. Specifically, via the MoPub ad exchange, currently owned by Twitter, which is about the only exchange that would work with a somewhat edgy (for brands, at least) company like Grindr.

Here’s a (possibly) mind-blowing fact: just about every time you load a web page in a browser, or click to a new experience inside an app, there’s code being run that sends your data to an ad exchange, which then broadcasts that data to hundreds of potential bidders (themselves connected to countless actual advertisers). For every click of yours, picture a dense bundle of data going into the cloud and instantly duplicating into hundreds of copies bound for thousands of servers, each one accessing millions of rows of data to figure out who you are. That’s the pulsing, arterial information flow of the modern mobile internet, and it beats billions of times a day.

What goes out with every pulse of the attention economy? Well, it’s right there in the MoPub developer docs, as the component objects of what’s known as a ‘bid request.’ The bid request is the packaged snippet of data that is blasted out from the server talking to your mobile device to the various ‘demand-side platforms’ (DSPs; think of them as something like the stock-buying software in high-frequency trading). I’ll highlight the relevant snippets of data below.

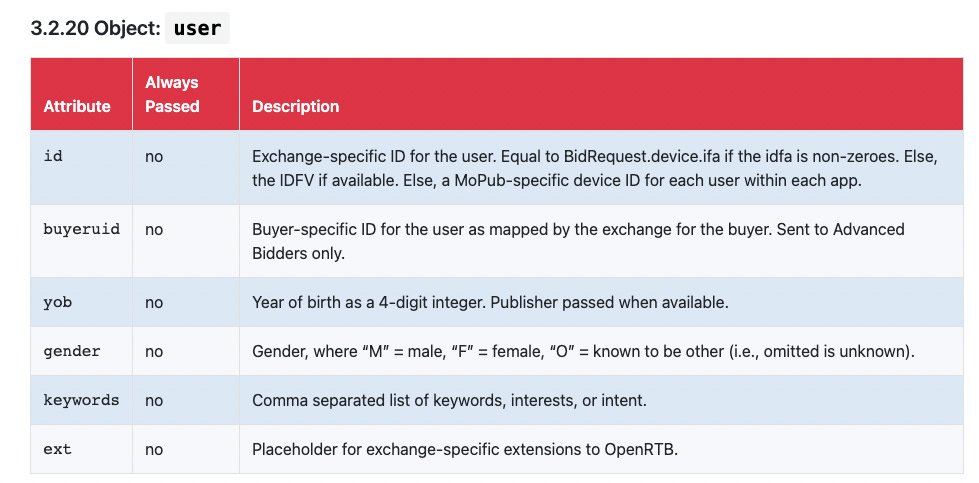

The user object:

Lots of interesting data here, but the key piece for this challenge is the user.id attribute. As for age and gender, Grindr claims it does not pass such user data to ad networks. That’s very likely true. It’s also totally irrelevant to this story.

More on this in a bit.

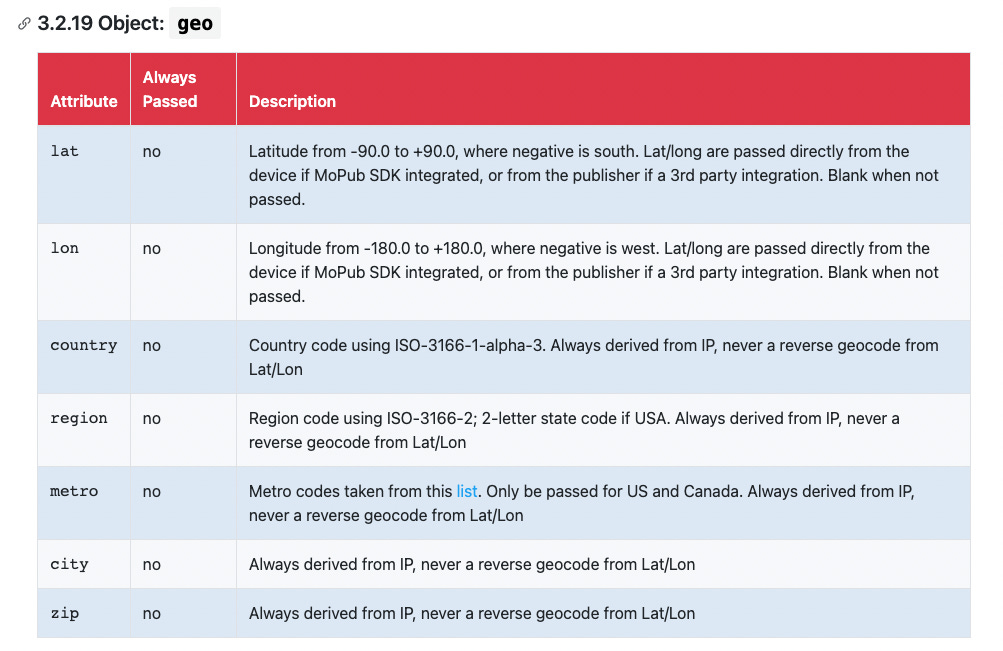

The geo object:

Despite lots of noise around ‘hyper-local targeting’ in the ad tech world at various points in the past, your precise GPS location isn’t terribly useful for most ad buys. Advertisers do care about broad geo targeting like state or city, but nobody is actually trying to show you an ad because you’re on the corner of Scott and Fulton in San Francisco (despite various paranoias to the contrary).

That said, if the app is tightly integrated with MoPub, the latitude and longitude straight from your phone are being passed. Alternatively, the app might be truncating the lat/long itself before passing it; per Grindr, its geo data is “within 100 meters of accuracy of your actual location.” Grindr also claims that taking the IP address (which it does pass) and mapping it to a location isn’t very accurate. It’s actually not as bad as they’re claiming: try it yourself with an online IP-geolocation lookup, and paste the resulting lat/long into Google Maps. It’ll usually be right to within a kilometer or so.
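To put “within 100 meters” in perspective, here’s a minimal sketch, assuming the app coarsens coordinates by simply rounding them to a fixed number of decimal places (one plausible approach; Grindr hasn’t said how it does it). It measures how far the rounded point lands from the true one using the standard haversine great-circle formula. The `coarsen` helper is hypothetical, and the coordinates are an arbitrary illustrative point, not anyone’s real location.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def coarsen(lat, lon, decimals):
    """Hypothetical coarsening scheme: round both coordinates to a fixed number of decimals."""
    return round(lat, decimals), round(lon, decimals)


if __name__ == "__main__":
    true_lat, true_lon = 44.81234, -91.49876  # arbitrary illustrative point
    for d in (4, 3, 2):
        c_lat, c_lon = coarsen(true_lat, true_lon, d)
        error_m = haversine_m(true_lat, true_lon, c_lat, c_lon)
        print(f"{d} decimals -> ({c_lat}, {c_lon}), off by about {error_m:.0f} m")
```

A degree of latitude is about 111 km, so each decimal place kept is worth a factor of ten: rounding to three decimals snaps points to a grid roughly 111 m tall (and narrower east to west away from the equator), the same order of magnitude as the 100 meters Grindr cites.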

GPS lat/long, either precise or rough, plus IP address, combined with years of data and known locations for an individual, would definitely give you a chance of de-anonymizing the data and finding a target. More on that soon.

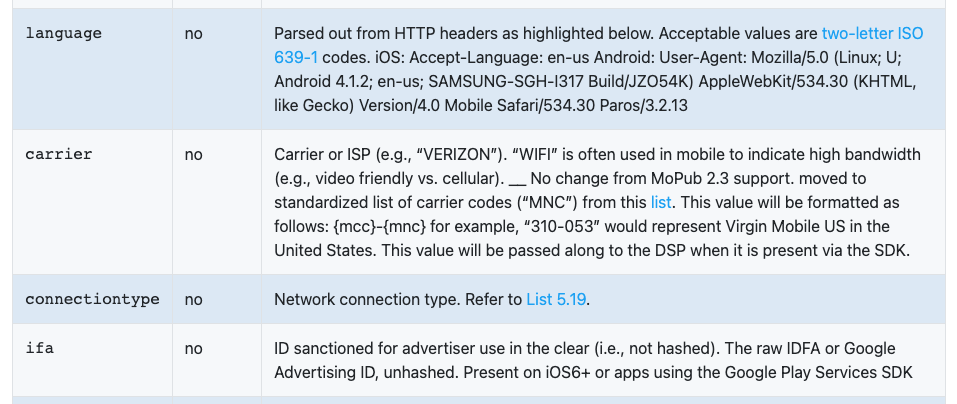

Finally, a piece of the ‘device’ object (with your very important device data):

The ‘ifa’ datum is absolutely key here: it’s the linchpin not just of this story, but of all advertising at the moment. As I mentioned in passing in a Pull Request piece, and detailed at length in the ‘Great Awakening’ chapter of Chaos Monkeys, identity is the underlying battleground of digital advertising.

This ID, in its pure IDFA form (Apple-speak for ‘Identifier for Advertisers’), is the ‘primary key’ (to use database lingo) in endless tables of data scattered across countless ad and data companies. Like your Social Security number, it’s the common ID that ties everything together. Without it, you’d never be able to tie data from app A to data from third-party company B; all the data becomes incommensurable. Which is why Apple essentially canceling the IDFA and replacing it with vendor-specific IDs (the IDFV mentioned in the docs) is such a seismic event in mobile monetization (again, read my Pull Request piece about it).
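The ‘primary key’ role is easiest to see in a toy join. This is a minimal sketch with invented rows and hypothetical table contents: sightings from app A’s bid stream on one side, a third-party company B’s profiles on the other, joined purely on the advertising ID (`ifa`). Nothing else links the two tables.

```python
from collections import defaultdict

# Invented rows for illustration; the IDs, app names, and segments are made up.
app_a_sightings = [
    {"ifa": "AAAA-1111", "app": "com.example.appA", "ts": "2019-06-02T10:31"},
    {"ifa": "BBBB-2222", "app": "com.example.appA", "ts": "2019-06-02T22:05"},
]
company_b_profiles = [
    {"ifa": "AAAA-1111", "segment": "frequent traveler"},
    {"ifa": "CCCC-3333", "segment": "new parent"},
]


def join_on_ifa(left, right):
    """Inner-join two lists of dicts on their shared advertising ID ('ifa')."""
    by_ifa = defaultdict(list)
    for row in right:
        by_ifa[row["ifa"]].append(row)
    joined = []
    for row in left:
        # Rows whose ID never appears on the other side simply drop out.
        for match in by_ifa.get(row["ifa"], []):
            joined.append({**row, **match})
    return joined


for row in join_on_ifa(app_a_sightings, company_b_profiles):
    print(row)
```

If the two sides instead carried per-vendor IDs (an IDFV differs between apps from different vendors), the `ifa` values wouldn’t match and this join would come back empty, which is exactly why losing the IDFA matters so much to the ecosystem.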

But let’s leave aside the wonky drama around mobile monetization and get back to this more than slightly underhanded hunt.

Grindr is one of the leading sources of mobile inventory on MoPub (itself one of the largest mobile ad exchanges). That means every Grindr user is spewing a data trail into the programmatic ad space every time the app tries to show them an ad.[2]

This isn’t something uniquely nefarious; just about every app you use that has ads (other than apps like Facebook, which have their own in-house ad systems) is piping its data into exchanges like MoPub. “It’s what pays for the Internet,” ad tech veterans slur to themselves after the fifth drink at an ad tech conference, rationalizing the orgy of mercenary data-trading they facilitate.

So how do we get the data?

Either the attacker was listening to the bid stream themselves for years and years—perhaps they work at or run the sort of demand-side software company that bids on MoPub inventory. Or, more likely, they simply purchased the data from one of many resellers. This ‘bid stream data’ (essentially the data bundles described above, and cached in some queryable format) is resold by people who are nominally only using it to buy ads for advertisers. Is it sketchy and borderline illegal given privacy legislation like GDPR? Yes, entirely. Does it still happen as a sideline to the main advertising data flow? Absolutely.

Note, even ‘bid stream’ data is somewhat limited. This isn’t like being watched by some newfangled Big Brother flying surveillance drones over your head. You’re only picking up a signal when some app is opened and an ad impression triggered. It’s not like you’ve got a second-by-second regular update on the user’s whereabouts, as you do when you share your location via Apple or Google Maps.

Unless, of course, our stalker bought combined data from an aggregator. Aggregators merge bid-stream data lifted from exchanges with more contractually sound, but nonetheless sketchy, data captured from apps that marginally increase their monetization by pumping your data to a middleman. Go read the sales pitches these aggregators make to app publishers. Who wouldn’t want to “earn passive revenue from your users”?[3]

In the latter case, our data is enriched by other (non-Grindr) apps, all serving as beacons for where our target is; the geographic picture is richer, but also more complex.

Finding the target

So far, we have an enormous dataset that’s roughly of the form:

[id, datetime, latitude, longitude, app ID, <other data>]

You still only know that a given user used a set of apps at certain dates and times in the past; you can’t yet tie that to real-world behavior. Some of the columns are also spottily populated, and the lat/long data is of varying precision; we’re in the putrid bowels of the data ecosystem, after all. How do we find the few needles in this mountainous haystack? How do we find the rows belonging to one Monsignor Burrill?
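Getting from raw bid requests to rows like the one above is mostly a matter of plucking a few nested fields. Here’s a minimal sketch, assuming OpenRTB 2.5 field names (`device.ifa`, `device.geo.lat`/`lon`, `app.bundle`), which exchange docs like MoPub’s broadly follow though the exact payload may differ. It also assumes whoever cached the stream stamped each request with the time it arrived, since the OpenRTB 2.5 bid request itself has no timestamp field. The `Sighting` type and the example request are invented for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Sighting:
    """One row of the dataset: [id, datetime, lat, lon, app ID]."""
    device_id: Optional[str]
    seen_at: datetime
    lat: Optional[float]
    lon: Optional[float]
    app_id: Optional[str]


def flatten(bid_request: dict, received_at: datetime) -> Sighting:
    """Pull the few fields that matter out of an OpenRTB-style bid request.

    Missing fields become None, since real bid streams are spottily populated.
    """
    device = bid_request.get("device", {})
    geo = device.get("geo", {})
    app = bid_request.get("app", {})
    return Sighting(
        device_id=device.get("ifa"),
        seen_at=received_at,
        lat=geo.get("lat"),
        lon=geo.get("lon"),
        app_id=app.get("bundle"),
    )


# Invented example request; every value here is made up.
example = {
    "id": "req-123",
    "app": {"bundle": "com.example.datingapp"},
    "device": {"ifa": "AAAA-1111", "ip": "203.0.113.7",
               "geo": {"lat": 44.812, "lon": -91.499}},
    "user": {"id": "exchange-user-42"},
}
print(flatten(example, datetime(2019, 6, 2, 10, 31, tzinfo=timezone.utc)))
```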

The real world is where digital anonymity goes to die.

As reported, Burrill is a diocesan priest in Eau Claire, Wisconsin. Where is he every Sunday morning around 10:30 a.m.? Saying Mass at St. James the Greater Catholic Church on 11th Street, that’s where (or at one of a few other Catholic churches). Sure, if we were to draw a circle a couple hundred meters wide around St. James and tally up the unique IDs that appear there on Sunday mornings, we’d turn up hundreds. But which one is always there, month after month?[4]

Modern databases allow easy construction of geo-based queries of the form: “find all rows with data within x meters of this lat/long, and from 9 to 11 a.m. on Sundays”. Also, who’s always at whatever rectory Eau Claire diocesan priests reside in, plus whatever public occasions or conferences a senior cleric such as Burrill would surely attend? The geo data can be massive and noisy, but as with any noisy signal, average over enough data and the signal starts coming through. The attacker probably had to look at years of data to unambiguously narrow this down. This attack was long in the making, and took months (at least) to pull off.
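In practice this would be a geospatial SQL query (PostGIS’s `ST_DWithin` is one common way to say ‘within x meters’). Here’s a minimal pure-Python sketch of the same idea on invented rows: filter to a hypothetical geofence and a weekday time window, then count on how many distinct days each ID shows up, so the regulars rise to the top. The `regulars` helper, center point, radius, and IDs are all made up for illustration.

```python
import math
from collections import defaultdict
from datetime import datetime


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters (haversine formula, mean Earth radius)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = math.radians(lat2 - lat1), math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * 6_371_000 * math.asin(math.sqrt(a))


def regulars(rows, center, radius_m, weekday, start_hour, end_hour):
    """Rank device IDs by how many distinct days they appear inside a geofence and time window.

    rows: (device_id, datetime, lat, lon) tuples; rows missing coordinates are skipped.
    weekday uses Python's convention (Monday=0 ... Sunday=6); hours are [start_hour, end_hour).
    """
    days_seen = defaultdict(set)
    for device_id, ts, lat, lon in rows:
        if lat is None or lon is None:
            continue
        if ts.weekday() != weekday or not (start_hour <= ts.hour < end_hour):
            continue
        if haversine_m(center[0], center[1], lat, lon) <= radius_m:
            days_seen[device_id].add(ts.date())
    return sorted(((d, len(days)) for d, days in days_seen.items()),
                  key=lambda pair: pair[1], reverse=True)


# Invented sightings; June 2, 9, and 16, 2019 were Sundays.
rows = [
    ("A", datetime(2019, 6, 2, 10, 30), 44.8001, -91.5001),
    ("A", datetime(2019, 6, 9, 10, 15), 44.8002, -91.4999),
    ("A", datetime(2019, 6, 16, 9, 45), 44.7999, -91.5002),
    ("B", datetime(2019, 6, 2, 10, 40), 44.8003, -91.5000),
    ("B", datetime(2019, 6, 4, 10, 40), 44.8000, -91.5000),  # a Tuesday: outside the window
    ("C", datetime(2019, 6, 9, 10, 0), 44.9000, -91.5000),   # ~11 km away: outside the fence
    ("D", datetime(2019, 6, 9, 10, 0), None, None),          # no coordinates
]
print(regulars(rows, center=(44.8, -91.5), radius_m=150, weekday=6, start_hour=9, end_hour=11))
```

A device that turns up week after week floats to the top, while one-off visitors sink; intersecting rankings from several such places is what shrinks the candidate list.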

Constructing a behavior profile

OK, so we’ve finally got the right IDFA, which lets us pick out only the data points from that device and dump the mountain of unrelated data that’s been bogging down our Spark jobs.

What we’ve got now, for one device, is row after row of either ad auctions with fairly rich user and device data, or just the device ID with lat/long from whatever random apps sell their data. Fortunately, with the ‘cardinality’ of our data vastly reduced by limiting it to one device, and with timestamps in hand, we can plot the data and try to thread together a trajectory. Google Maps did exactly this with my own timeline back when I was on Android. The attacker’s version would look about the same, but more scattered, with a very jumpy connect-the-dots feel.

If we’ve got years of data, this is going to be a slog to examine by eye.
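Code can do the first pass instead. A minimal sketch, assuming rows shaped like (device_id, datetime, lat, lon): the hypothetical `trajectory` helper keeps one device, orders its points by time, and reports each hop’s distance and implied speed. Hops implying an absurd speed between nearby timestamps are a quick way to spot noisy points (for instance, a coarse IP-derived location sandwiched between GPS fixes). The rows are invented.

```python
import math
from datetime import datetime


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters (haversine formula, mean Earth radius)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = math.radians(lat2 - lat1), math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * 6_371_000 * math.asin(math.sqrt(a))


def trajectory(rows, device_id):
    """Return one device's time-ordered points and, per hop, (arrival time, meters, km/h)."""
    points = sorted(
        (ts, lat, lon)
        for dev, ts, lat, lon in rows
        if dev == device_id and lat is not None and lon is not None
    )
    hops = []
    for (t0, lat0, lon0), (t1, lat1, lon1) in zip(points, points[1:]):
        meters = haversine_m(lat0, lon0, lat1, lon1)
        seconds = (t1 - t0).total_seconds()
        # Two sightings with the same timestamp get an infinite implied speed.
        kmh = meters / seconds * 3.6 if seconds > 0 else float("inf")
        hops.append((t1, round(meters), round(kmh, 1)))
    return points, hops


rows = [
    ("A", datetime(2019, 6, 2, 10, 0), 44.8000, -91.5000),
    ("B", datetime(2019, 6, 2, 9, 30), 44.9000, -91.4000),
    ("A", datetime(2019, 6, 2, 9, 0), 44.8000, -91.6000),
]
points, hops = trajectory(rows, "A")
for hop in hops:
    print(hop)
```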

What I would do is compile a list of ‘hot spots’ (locations we think are associated with the behavior of interest) and simply join that table of locations against our large table of ads data. Again, modern databases handle lat/long data seamlessly. Logic-wise, it’s effectively defining the equality operator for a tabular join to be ‘within x meters of each other’, and finding where two sets of points functionally overlap: one the set of the target’s locations, the other the incriminating locations. The hardest part is compiling the list of locations we want to prove our target visited. Given our attacker’s apparent obsession, you could just manually copy/paste the lat/long from a set of Google Maps results, or write code that ‘scrapes’ (i.e., programmatically fetches by pretending to be a user at a browser) the coordinates of a set of businesses (mooching data off Yelp, for example). The coverage actually named a specific bar in Vegas, so it’s clear the attacker had a set of key locations he was trying to pin on Burrill.
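The ‘within x meters’ join can be sketched in a few lines. This is a minimal, brute-force version under stated assumptions: one device’s points as (datetime, lat, lon), a short hand-compiled list of hypothetical named places, and a nested loop, which is fine for a handful of places; a real database would use a spatial index (for example, PostGIS with `ST_DWithin`) instead. The `proximity_join` helper, place names, and coordinates are all invented.

```python
import math
from datetime import datetime


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters (haversine formula, mean Earth radius)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = math.radians(lat2 - lat1), math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * 6_371_000 * math.asin(math.sqrt(a))


def proximity_join(points, places, max_m):
    """Pair each point with every place within max_m meters: a join whose 'equality' is nearness.

    points: (datetime, lat, lon) tuples for one device.
    places: (name, lat, lon) tuples for the locations of interest.
    Returns (place name, timestamp, distance in whole meters) for each match.
    """
    matches = []
    for ts, lat, lon in points:
        for name, place_lat, place_lon in places:
            meters = haversine_m(lat, lon, place_lat, place_lon)
            if meters <= max_m:
                matches.append((name, ts, round(meters)))
    return matches


places = [("Place X", 44.8100, -91.4900), ("Place Y", 44.7000, -91.4000)]
points = [
    (datetime(2019, 6, 8, 23, 15), 44.8101, -91.4901),  # roughly 14 m from Place X
    (datetime(2019, 6, 9, 10, 30), 44.7500, -91.4500),  # kilometers from both places
]
print(proximity_join(points, places, max_m=100))
```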

Once we’re sure we have the right IDFA, and once we’ve done the hackery around incriminating locations, we’re essentially done. According to The Pillar, they had some sort of ‘independent expert’ vouch for the data and approach. All that person likely did was look at the cleaned data, see that it jibed with mobile data as it’s currently sold, and maybe do a sanity check on the geo-data joins.

It’s worth noting that if the attacker got the wrong device ID to begin with, the entire story is hogwash.

The hacker

So who did all this?

According to the Catholic News Agency, some shadowy lone figure had approached the Church in the past, claiming to have deduced the behavior patterns of various Catholic clergy. The Church told him to get lost, but at some point that figure’s pitch landed with The Pillar, and the rest is scandalous history.

This story triggers a ‘sketch’ reflex in me: who is really behind this convoluted hit job? Because that’s exactly what this was. None of this requires ninja-level technology or skills, but it’s way beyond the Googling around or spreadsheet hacking of your average normie conspiracy theorist or journalist. That goes double if the hacker did all this analysis for several targets over several years; that’s a considerable amount of work and expense (this data certainly isn’t free, and they bought a lot of it). Somebody wanted Burrill and possibly others to go down. That’s the real mystery in this story: Who? Why?

The legality and privacy issues, or, what is to be done?

Most of the coverage stressed that the data was obtained ‘legally’, in that our hacker didn’t plant malware on the target’s phone, or steal it, or otherwise do criminally shady stuff. This implies the hacker bought the data, rather than lifting it from a DSP or compromising the target’s device. But even then, such data collection almost certainly violates MoPub’s terms of service, in spirit if not in law. Per Grindr, it also violates Grindr’s agreements with its advertising partners. MoPub has no particular incentive to seed a third-party ecosystem with data that it doesn’t monetize. More conscientious publishers like Grindr also have no such incentive (though they do have an incentive to run ads).

The problem is that programmatic advertising, while perhaps the most technically sophisticated way to buy and sell ads, is leaky when it comes to data, almost necessarily. The entire goal of the machinery is to give advertisers the same ability to target users and optimize their ad spend that big players like Google and Facebook have. Despite the shady nether regions (and I’d be the first to say they exist), the programmatic machinery and the third-party ecosystem that’s sprouted around it are a source of market competition against incumbents who would otherwise dominate totally (rather than just mostly).

I’ve said it before, but I’ll say it again: this attack isn’t how the ad tech world actually works! Nobody is trying to thread together your behavior timeline, or listen to your microphone, or any of that conspiratorial BS. Your detailed trajectory through the world just isn’t worth much, and there’s no advertising business model around this sort of targeting.

That said, given the shady world of third-party data brokers, who indeed try to monetize the data exhaust from the programmatic advertising world, hackery like the above is certainly possible... so long as those third-party vendors exist. Consider the rivers of pixels that have been written on the supposed advertising depredations of Facebook—a company with a first-party user relationship that’s definitely incentivized to not sell data—when the real shady shit is going on with companies you’ve never heard of.

This is also all a bit moot: Apple has deprecated IDFA, and Google will surely do the same with its analogous GAID. Even after Apple forces exchanges like MoPub to use vendor-specific IDs like IDFV, the data will still be joinable within that vendor’s bid stream. But cross-vendor and cross-publisher joining of data (like we did in this attack) will be impossible, save in the fuzziest of ways. We will indeed be in a more privacy-safe future as the incumbents retire the ability to identify and track individual users, but at the expense of any non-incumbent competition. Whoever was hellbent on ruining Burrill will not be able to do so as easily in the future; then again, any entrepreneurs who venture to undermine the Google/Apple duopoly will find themselves similarly hamstrung.

When Apple and Google finally go beyond just canceling user-specific IDs, and go all the way to moving all targeting data on-device, this debate becomes even more moot as nobody outside those two companies will have much in the way of user data. In a fully on-device world, even Google and Apple don’t know much about you, as the user data that leaves the phone couldn’t be used (even in theory) to narrow you down below a coarse segment size.

Then, and only then, will we be back in the analog world of old where someone could burn a few receipts, disappear back into a large crowd or skip town, and leave no trace of a furtive adventure. No doubt, Burrill thought he was doing as much when he closed his mobile app and went back to his ministry; the sophisticated digital technology to replicate the analog world just isn’t there yet.

[1] Let me tell you what I won’t be talking about here: the ethics of outing, Catholic Church policy around its clergy, or any of the other complex but non-technical threads the media coverage jumped on with glee. These topics are entirely above my pay grade, or outside my lane, or pick your favorite directional metaphor. The world would be a happier place if people embraced epistemic humility and didn’t comment publicly on things they know little or nothing about.

In fact, we should all embrace a piece of product-manager trickery called ‘non-goals’: things you explicitly set out not to do in a given project, as a way to ward off accidentally doing them to the detriment of the product. Consider the above my ‘non-goals’ for this piece.

[2] The fact that the data comes from Grindr is itself part of the data: it’s passed as a unique app ID, typically used for targeting (or anti-targeting) particular publishers. To those of a Catholic persuasion, the mere fact that this user is associated with Grindr is itself incriminating.

[3] To app publishers, every dollar they make off you, whether via fees, ads, or resold data, is calculated out to six decimals and analyzed as part of the ever-important LTV (lifetime value), which is broken down into a vast panoply of segments, inbound channels, and signup cohorts. The traffic in data and pixels for dollars is a very exact one. Why else do washed-up physics grad students and Wall Street quants like me end up in it? We wanted flying cars and instead we got real-time markets in human attention. Hate the game, not the player (or at least not this former player).

[4] If we’re really evil, we can be proactive about it. Create a dummy Grindr account with a fake, attractive photo, and swipe left (with location set to Eau Claire) until we find a photo of Burrill. Then engage with him in the evening, when he must be at the rectory. When he sees the notification and responds, Grindr will start up and send out another pulse of data to the ad exchanges. The row of data with that timestamp and roughly that geo will almost certainly be his device.